LLM Search Optimization Guide for 2026: How to Rank Your Ecommerce Brand in AI Search

TL;DR

- The “answer engines” (ChatGPT, Google AI Overviews, Perplexity, Brave) pick a handful of sources — not thousands. Your job isn’t to “rank”; it’s to be cited (or be the single answer).

- Give AI systems clean access (robots.txt), machine-readable product data (schema), and answer-ready content. Measure crawls, inclusion, and mentions monthly.

- Allow reputable AI crawlers (OpenAI, Perplexity, Common Crawl), and understand that Google-Extended governs only Gemini training and grounding, not inclusion in AI Overviews.

- Expect behavior shifts: AI Overviews already reaches a massive audience and compresses click-through, so your brand must appear in the overview or answer itself.

- Build authority where LLMs look: credible sources, structured product/FAQ content, and authentic forum/UGC participation (yes, including Reddit).

Why is LLM Search Optimization Crucial?

Google’s AI Overviews (and AI Mode) are rolling out broadly, and LLM answer engines like ChatGPT, Perplexity and Claude keep growing. The result: more queries end in one synthesized response with a few citations, so visibility consolidates among the “mentioned few.” If you’re not on that short list, the organic opportunity evaporates.

Compounding this, multiple studies show AI answers cite a different mix of sources than classic search results: heavy on Reddit and other UGC for some engines, Wikipedia for others. Optimizing for LLM search doesn’t mean copy-pasting your Google playbook.

How LLM Answer Engines Actually Pick Sources

- ChatGPT / OpenAI Search experiences

Uses OpenAI crawlers: ChatGPT-User when a user triggers browsing, OAI-SearchBot for search indexing, and GPTBot for collecting model-training data. Access depends on your robots.txt and page quality. (OpenAI Platform)

- Google AI Overviews / AI Mode

Built on Google’s search index plus a generative AI layer. You can’t “opt out” of Overviews via Google-Extended; that token only controls Gemini training/grounding usage. Inclusion still relies on normal Search quality signals and structured clarity. (Google for Developers)

- Perplexity

Runs live web retrieval with its own crawler (PerplexityBot) and shows citations. Allowing PerplexityBot in robots.txt helps inclusion. (Perplexity)

- Common Crawl & the broader ecosystem

CCBot builds open web snapshots used across AI research/training. (commoncrawl.org)

The 5-Step Framework for LLM Visibility

Step 1 — Crawlability & Controls

Make sure key pages are fetchable by Googlebot and Bingbot and by the reputable AI crawlers you choose to allow (GPTBot, OAI-SearchBot, PerplexityBot, CCBot). Validate access in your server logs. Sample robots.txt (edit to match your policy):

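```
# Default rules for all crawlers: one path per Disallow line
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /internal-search

# AI crawlers you allow. A crawler obeys only its most specific matching
# group, so the Disallow rules must be repeated here to still apply.
User-agent: GPTBot
User-agent: OAI-SearchBot
User-agent: PerplexityBot
User-agent: CCBot
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /internal-search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
```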
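Before deploying a robots.txt change, it can help to check which bots may fetch which URLs. The sketch below uses Python's standard-library `urllib.robotparser`; the policy text, URLs and bot list are illustrative assumptions, with one path per `Disallow` line as the format requires. One caveat: `urllib.robotparser` applies the first matching rule in a group, while Google picks the most specific (longest) match, so the sketch lists `Disallow` lines before `Allow: /` to get the same answer under both. Treat it as a quick sanity check, not a replica of any engine's crawler.

```python
from urllib.robotparser import RobotFileParser

# Illustrative policy: a default group for all bots plus a group for allowed AI
# crawlers. Disallow lines come before "Allow: /" because urllib.robotparser
# uses the first matching rule, while Google uses the longest match.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /internal-search

User-agent: GPTBot
User-agent: OAI-SearchBot
User-agent: PerplexityBot
User-agent: CCBot
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /internal-search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

# Bots and URLs to spot-check (example values only).
BOTS = ["Googlebot", "GPTBot", "OAI-SearchBot", "PerplexityBot", "CCBot"]
URLS = [
    "https://www.example.com/products/cotton-saree",
    "https://www.example.com/cart/",
]


def access_matrix(robots_txt: str, bots: list, urls: list) -> dict:
    """Map (bot, url) -> True if the robots.txt text lets that bot fetch that URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {(bot, url): parser.can_fetch(bot, url) for bot in bots for url in urls}


if __name__ == "__main__":
    for (bot, url), allowed in access_matrix(ROBOTS_TXT, BOTS, URLS).items():
        print(f"{bot:<14} {'ALLOW' if allowed else 'BLOCK'}  {url}")
```

To use it on a real site, paste your live file into `ROBOTS_TXT` and list your key PDP and category URLs.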

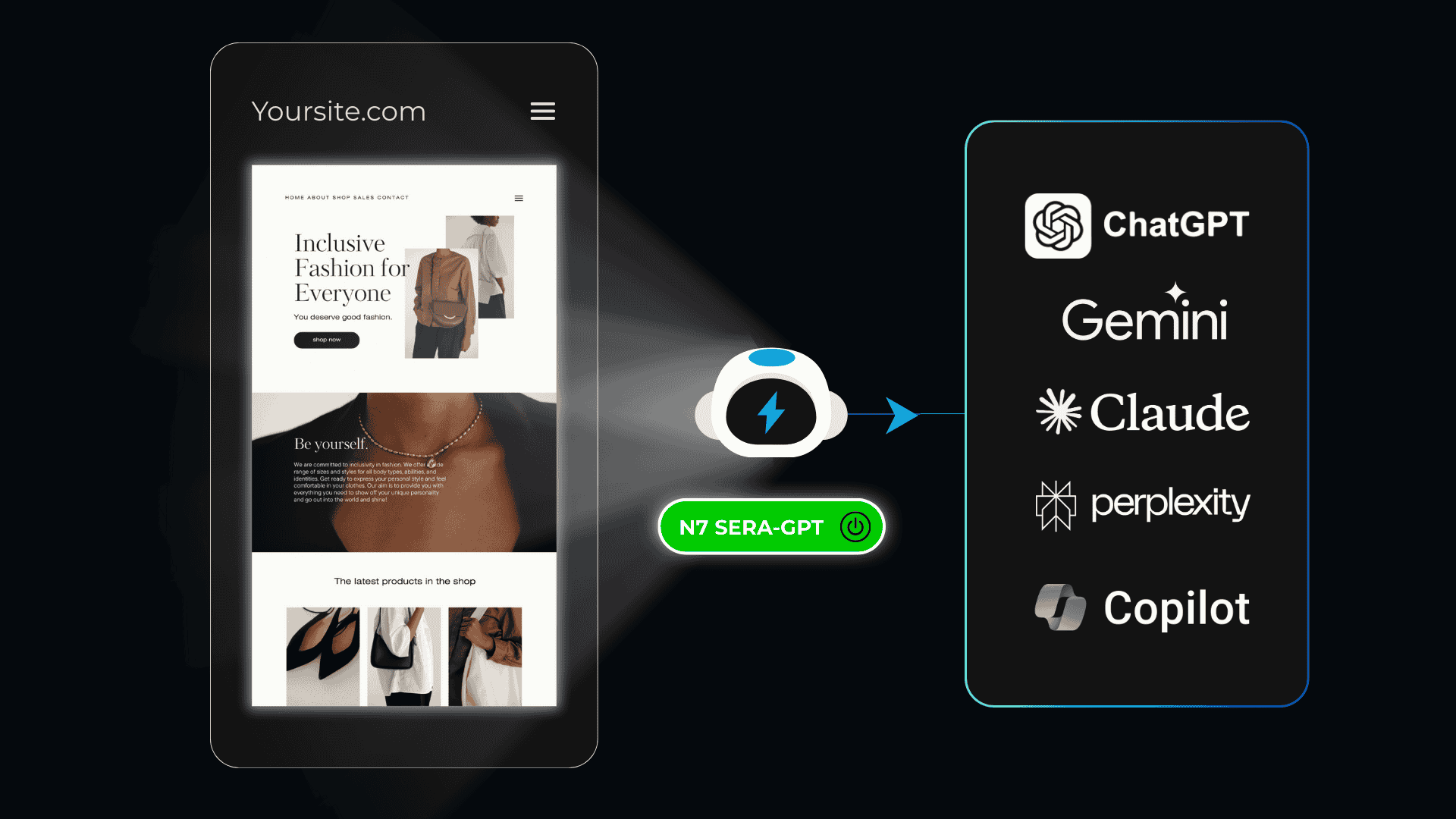

Want to ensure your site is crawlable by all the AI bots? Explore SERA-GPT!

Step 2 — Answer-ready Content

Write pages that answer the questions shoppers ask AI: “Is it machine-washable?”, “Safe for 3-year-olds?”, “Under ₹1500?”. Add short Q&A blocks/subheads and comparison tables. Create buying guides like “Best breathable cotton sarees for humid climates” that internally link to product detail pages (PDPs).

Step 3 — Reliable Structure (Schema)

Implement JSON-LD for Product (price, availability, ratings), FAQPage, and BreadcrumbList. This lets parsers read your catalog like a structured database.
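As a minimal sketch of this markup, the Python below builds schema.org `Product` (with `Offer` and `AggregateRating`), `FAQPage` and `BreadcrumbList` objects and wraps each in a JSON-LD `<script>` tag. The product, FAQ and breadcrumb values are hypothetical placeholders; in practice they would be generated from your catalog so that price and availability always match the visible page.

```python
import json

# Hypothetical product record; in practice this comes from your catalog/PIM.
PRODUCT = {
    "name": "Breathable Cotton Saree",
    "sku": "SAREE-001",
    "url": "https://www.example.com/products/cotton-saree",
    "price": "1499.00",
    "currency": "INR",
    "in_stock": True,
    "rating": 4.6,
    "review_count": 128,
}

# Hypothetical customer-language Q&As shown on the PDP.
FAQS = [
    ("Is it machine-washable?", "Yes, on a gentle cold cycle."),
    ("What is the return window?", "30 days from delivery."),
]


def product_jsonld(p: dict) -> dict:
    """schema.org Product with a nested Offer and AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": p["name"],
        "sku": p["sku"],
        "offers": {
            "@type": "Offer",
            "url": p["url"],
            "price": p["price"],
            "priceCurrency": p["currency"],
            "availability": "https://schema.org/InStock"
            if p["in_stock"] else "https://schema.org/OutOfStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": p["rating"],
            "reviewCount": p["review_count"],
        },
    }


def faq_jsonld(pairs: list) -> dict:
    """schema.org FAQPage built from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {"@type": "Question", "name": q,
             "acceptedAnswer": {"@type": "Answer", "text": a}}
            for q, a in pairs
        ],
    }


def breadcrumb_jsonld(trail: list) -> dict:
    """schema.org BreadcrumbList from ordered (name, url) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


def script_tag(data: dict) -> str:
    """Wrap JSON-LD in a <script> tag; '</' is escaped so text cannot close the tag early."""
    body = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{body}</script>'


if __name__ == "__main__":
    trail = [("Home", "https://www.example.com/"),
             ("Sarees", "https://www.example.com/sarees/")]
    for block in (product_jsonld(PRODUCT), faq_jsonld(FAQS), breadcrumb_jsonld(trail)):
        print(script_tag(block))
```

Running the output through Google's Rich Results Test or the Schema Markup Validator before shipping is a sensible final check.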

Step 4 — Evidence & Authority

Earn mentions where engines look: expert reviews, credible lists, and authentic UGC (Reddit/Quora). Target placements that LLMs cite disproportionately.

Step 5 — Speed & Experience

Core Web Vitals still matter—both for Google inclusion and as a quality proxy many engines respect. Treat performance as table stakes for being excerpted or summarized.
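Google assesses Core Web Vitals at the 75th percentile of real page loads, against the thresholds published on web.dev: LCP is good at 2.5 s or less and poor above 4 s, INP is good at 200 ms or less and poor above 500 ms, and CLS is good at 0.1 or less and poor above 0.25. The sketch below applies those thresholds to lists of field measurements, for example values collected through a RUM tool. The sample numbers are hypothetical, and the nearest-rank percentile is a simplification of how tools like CrUX aggregate data.

```python
import math

# Thresholds published on web.dev as (good_max, needs_improvement_max).
# LCP and INP are in milliseconds; CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def p75(samples: list) -> float:
    """75th percentile by the nearest-rank method (a simplification)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    """Classify one value as good / needs improvement / poor."""
    good_max, ni_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= ni_max:
        return "needs improvement"
    return "poor"


def assess(field_data: dict) -> dict:
    """Rate each metric at its 75th percentile; a page passes only if all are good."""
    result = {}
    for metric, samples in field_data.items():
        value = p75(samples)
        result[metric] = (value, rate(metric, value))
    return result


if __name__ == "__main__":
    # Hypothetical field samples for one PDP template.
    sample = {
        "LCP": [1800, 2100, 2600, 3900],
        "INP": [120, 180, 240, 90],
        "CLS": [0.02, 0.05, 0.12, 0.01],
    }
    report = assess(sample)
    for metric, (value, verdict) in report.items():
        print(f"{metric}: p75={value} -> {verdict}")
    print("passes:", all(verdict == "good" for _, verdict in report.values()))
```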

A practical 10-step checklist (eCommerce & D2C-specific)

- Set up access & verification: Google Search Console + Bing Webmaster Tools. Use URL submission and Crawl Control to ensure fast discovery without performance hits.

- Robots.txt & bot hygiene: Explicitly allow the AI crawlers you trust (OpenAI, Perplexity, CCBot). Monitor with logs or bot analytics.

- Site speed and clean HTML: Prioritize CLS/LCP/INP; reduce client-side rendering that hides content from fetchers.

- Schema everywhere it counts: Product, Offer, AggregateRating, Review, FAQPage. Keep price/availability fresh.

- Answer-centric PDPs: Add 6–10 customer-language Q&As per PDP (“Does it spill?”, “Return window?”). Link to care guides and sizing. (This mirrors the “conversation” LLMs try to resolve.)

- Evergreen buying guides + comparison hubs: Category “Best of” pages and seasonal guides that (a) demonstrate expertise, (b) cite sources, and (c) link internally to PDPs.

- Authority building where LLMs shop for sources: Secure credible third-party mentions and seed authentic discussions in relevant subreddits and on Quora. Plan for Reddit to be a top citation source in some engines.

- Syndicate your catalog: Keep Google Merchant Center/Bing feeds pristine; align PDP content with feed attributes. (Perplexity often references pages those ecosystems surface.)

- Audit inclusion monthly: Ask each engine targeted prompts, record whether your brand or PDP appears or is cited, and log changes. Track crawler hits from GPTBot/OAI-SearchBot/PerplexityBot/CCBot.

- Govern the gray areas: Given recent allegations around scraping behavior from some players, adopt a written policy on which bots you permit and why, balancing reach with content protection.
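To monitor crawler hits from logs, a short script can count AI-bot requests by status code and path. This is a minimal sketch, assuming logs in the Apache/Nginx "combined" format; CDN and WAF exports often use other layouts, so the regex will likely need adjusting. The sample lines are made up. Because user-agent strings are easy to spoof, treat these counts as a trend indicator, and where a vendor publishes its crawler IP ranges, cross-check against them.

```python
import re
from collections import Counter
from typing import Iterable, Optional, Tuple

# Apache/Nginx "combined" log format; adjust to your actual log layout.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# User-agent tokens for the bots discussed in this guide.
AI_BOTS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "CCBot")

# Hypothetical sample lines for illustration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jan/2026:10:00:00 +0000] "GET /products/cotton-saree HTTP/1.1" '
    '200 5123 "-" "Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/Jan/2026:10:05:00 +0000] "GET /old-collection HTTP/1.1" '
    '404 0 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '192.0.2.9 - - [10/Jan/2026:10:06:00 +0000] "GET /cart/ HTTP/1.1" '
    '200 812 "https://www.example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def bot_name(user_agent: str) -> Optional[str]:
    """Return the first AI bot token found in the user-agent string, if any."""
    ua = user_agent.lower()
    for bot in AI_BOTS:
        if bot.lower() in ua:
            return bot
    return None


def crawler_hits(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count AI-bot requests as (bot, status) and (bot, path without query)."""
    by_status: Counter = Counter()
    by_path: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that are not in combined format
        bot = bot_name(match.group("agent"))
        if bot is None:
            continue  # not one of the tracked AI crawlers
        by_status[(bot, match.group("status"))] += 1
        by_path[(bot, match.group("path").split("?")[0])] += 1
    return by_status, by_path


if __name__ == "__main__":
    by_status, by_path = crawler_hits(SAMPLE_LOG)
    for (bot, status), hits in sorted(by_status.items()):
        print(f"{bot:<14} HTTP {status}: {hits}")
```

A rising share of 404s or blocked responses for a bot is usually the first thing worth investigating.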

What Metrics to Measure & Optimize for your D2C Brand

Measuring brand visibility in AI search is still largely a black box. Below are the key metrics you can start tracking, either manually or with the tools called out in the list.

- Answer Inclusion Rate (a.k.a. LLM Mention Share) - % of target prompts where your brand or PDP is named/cited in AI answers.

- Citation Share of Voice (C-SOV) - Your share of all citations in an answer versus competitors.

- Source Footprint & Authority - Count and quality of third-party pages LLMs frequently cite that also mention/link to you (buying guides, expert lists, credible forums).

How to measure: Ahrefs/Semrush/Moz (referring domains, Top pages)

- Crawler Access & Coverage (AI Bots)

What it shows: Whether reputable AI crawlers reach key PDPs/category hubs.

How to measure: Use tools such as SERA-GPT to confirm your website is being crawled by AI bots so it can show up in AI recommendations. Check server/WAF logs via your CDN; filter by GPTBot, OAI-SearchBot, PerplexityBot, CCBot. Validate robots.txt with Screaming Frog/Sitebulb “List Mode.”

- Speed & Renderability (Core Web Vitals)

What it shows: User-centric performance signals LLMs and search systems treat as quality proxies (LCP, INP, CLS).

How to measure: Search Console Core Web Vitals report for field data; PageSpeed Insights or Lighthouse CI for lab data; the web-vitals JavaScript library reporting into GA4, or real user monitoring tools like N7 RDX, for continuous tracking.

- Answer-Surface Traffic & Assisted Conversions

What it shows: Sessions and revenue influenced by pages that AI answers often cite (e.g., “Best X” articles, comparison guides, subreddit threads).

How to measure: GA4 (Referrals + Landing pages + Assisted conversions), UTMs on PR/outreach placements; maintain a watchlist of commonly cited referrers.

- Prompt Coverage & Content Gaps

What it shows: Share of high-intent, conversational questions that your site can answer (on PDPs/FAQs/guides).

How to measure: Build a master question set via ChatGPT, AlsoAsked/AnswerThePublic, Semrush Topic Research; map each question to an on-site URL (or backlog) in a spreadsheet or Jira/Notion.

- Catalog/Feed Parity & Freshness

What it shows: Consistency of price/availability between PDPs, structured data, and product feeds (a common LLM failure point).

How to measure: Google Merchant Center diagnostics
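Alongside Merchant Center diagnostics, a parity check can be scripted by reading the Product JSON-LD on a PDP and comparing it with the matching feed row. The sketch below makes several assumptions: it uses only Python's standard library, expects the feed `price` as `"<amount> <currency>"` and `availability` values such as `in_stock` (Google Merchant Center conventions), checks only the first Offer, and does not handle `@graph`-style JSON-LD. The sample page and feed row are hypothetical, with a deliberately stale feed price.

```python
import json
from decimal import Decimal
from html.parser import HTMLParser
from typing import Optional

# schema.org availability URLs -> Merchant Center feed values.
# Only the https URL form is mapped; extend if your pages use other forms.
AVAILABILITY = {
    "https://schema.org/InStock": "in_stock",
    "https://schema.org/OutOfStock": "out_of_stock",
    "https://schema.org/PreOrder": "preorder",
    "https://schema.org/BackOrder": "backorder",
}


class JsonLdExtractor(HTMLParser):
    """Collects every <script type="application/ld+json"> block on a page."""

    def __init__(self) -> None:
        super().__init__()
        self._buffer: Optional[list] = None  # text chunks of the block being read
        self.blocks: list = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            text = "".join(self._buffer).strip()
            if text:
                self.blocks.append(json.loads(text))
            self._buffer = None


def parity_issues(pdp_html: str, feed_row: dict) -> list:
    """Compare the page's Product offer with one feed row; return mismatches."""
    extractor = JsonLdExtractor()
    extractor.feed(pdp_html)
    extractor.close()
    products = [b for b in extractor.blocks
                if isinstance(b, dict) and b.get("@type") == "Product"]
    if not products:
        return ["no Product JSON-LD found"]
    offer = products[0].get("offers", {})
    if isinstance(offer, list):  # several offers: check the first one only
        offer = offer[0] if offer else {}
    issues = []
    amount, currency = feed_row["price"].split()  # e.g. "1499.00 INR"
    page_price = offer.get("price")
    if page_price is None or Decimal(str(page_price)) != Decimal(amount):
        issues.append(f"price: page={page_price} feed={amount}")
    if offer.get("priceCurrency") != currency:
        issues.append(f"currency: page={offer.get('priceCurrency')} feed={currency}")
    page_avail = AVAILABILITY.get(offer.get("availability", ""), "unknown")
    if page_avail != feed_row["availability"]:
        issues.append(f"availability: page={page_avail} feed={feed_row['availability']}")
    return issues


SAMPLE_PDP = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Product", "name": "Breathable Cotton Saree", '
    '"offers": {"@type": "Offer", "price": "1499.00", "priceCurrency": "INR", '
    '"availability": "https://schema.org/InStock"}}'
    '</script></head><body></body></html>'
)
SAMPLE_FEED_ROW = {"price": "1599.00 INR", "availability": "in_stock"}  # stale price

if __name__ == "__main__":
    print(parity_issues(SAMPLE_PDP, SAMPLE_FEED_ROW))
```

Running a check like this across PDPs after each price or stock update can flag mismatches before they surface in Merchant Center.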

Prompts to audit your brand’s LLM presence (copy/paste)

- “Best [your category] under [₹ / $ price]? Cite sources.”

- “Which [your niche] brands ship to [country] fastest?”

- “Is [yourbrand.com] a good place to buy [product]? Pros & cons?”

- “Compare [your product] vs [competitor]—be specific about materials, return policies, and care.”

Log whether you’re cited or mentioned in each result.
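Once audit results are logged, the Answer Inclusion Rate and Citation Share of Voice metrics can be computed with a few lines of Python. This is a minimal sketch, assuming each logged answer records the engine, the prompt, the brands named and the cited domains; `AuditResult`, the brand names and the sample records are hypothetical. Citation share here is aggregated across all logged answers (your citations divided by all citations), which is one reasonable reading of the metric; averaging per answer is an alternative.

```python
from dataclasses import dataclass, field


@dataclass
class AuditResult:
    """One logged AI answer for one prompt on one engine."""
    engine: str
    prompt: str
    brands_mentioned: list = field(default_factory=list)
    cited_domains: list = field(default_factory=list)  # log bare domains, without "www."


def is_included(result: AuditResult, brand: str, domain: str) -> bool:
    """True if the answer names the brand (case-insensitive) or cites its domain."""
    named = brand.lower() in (b.lower() for b in result.brands_mentioned)
    return named or domain in result.cited_domains


def inclusion_rate(results: list, brand: str, domain: str) -> float:
    """Answer Inclusion Rate as a fraction from 0.0 to 1.0."""
    if not results:
        return 0.0
    return sum(is_included(r, brand, domain) for r in results) / len(results)


def citation_share(results: list, domain: str) -> float:
    """Citation Share of Voice: your citations divided by all logged citations."""
    total = sum(len(r.cited_domains) for r in results)
    ours = sum(r.cited_domains.count(domain) for r in results)
    return ours / total if total else 0.0


def per_engine(results: list, brand: str, domain: str) -> dict:
    """Inclusion rate broken down by engine."""
    engines = sorted({r.engine for r in results})
    return {e: inclusion_rate([r for r in results if r.engine == e], brand, domain)
            for e in engines}


# Hypothetical audit log.
RESULTS = [
    AuditResult("ChatGPT", "best cotton sarees under ₹1500",
                ["YourBrand", "RivalCo"], ["yourbrand.com", "reddit.com"]),
    AuditResult("Perplexity", "best cotton sarees under ₹1500",
                ["RivalCo"], ["rivalco.com", "reddit.com", "rivalco.com"]),
    AuditResult("Google AI Overviews", "is yourbrand.com a good place to buy sarees",
                ["YourBrand"], ["yourbrand.com"]),
    AuditResult("Perplexity", "is yourbrand.com a good place to buy sarees",
                [], ["reddit.com"]),
]

if __name__ == "__main__":
    print("inclusion rate:", inclusion_rate(RESULTS, "YourBrand", "yourbrand.com"))
    print("citation share:", round(citation_share(RESULTS, "yourbrand.com"), 3))
    print("by engine:", per_engine(RESULTS, "YourBrand", "yourbrand.com"))
```

Re-running the same prompt set each month and comparing these numbers gives the month-over-month trend the checklist asks for.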

Note for CMOs and Marketing Leads: How eCommerce & D2C Brands Win AI Recommendations

Treat AI answer engines (Google’s AI Overviews, ChatGPT, Perplexity) as a distinct acquisition channel with its own KPIs, budget, and content ops. Create answer-ready PDPs and entity-dense buying guides that LLMs can confidently excerpt, backed by authority earned where these systems actually look: credible “best of” lists, expert reviews, and authentic UGC communities (not all links carry equal weight in AI results).

Operationally, enforce access hygiene for reputable crawlers (GPTBot, OAI-SearchBot, PerplexityBot), monitor inclusion monthly the way you track indexation, and keep product feeds and schema continuously fresh. Finally, because norms are shifting fast, maintain a pragmatic, written bot policy that balances discovery with content protection, and revisit it quarterly as Google, OpenAI, and the broader ecosystem evolve.

Closing thought

In classic SEO, being on Page 1 was the goal. In AI search, the goal is starker: be the answer, or be invisible. The brands that win AI recommendations will look like the brands that help: fast pages, structured data, honest comparisons, and content written in the customer’s language. That’s generative engine optimization (GEO) in practice.
